Implementing Blum's: Conscious Turing Machines

Author

Swarup Tripathy

Title

Implementing Blum's: Conscious Turing Machines

Description

Building an architecture toward implementing the dynamics of a Conscious Turing Machine

Category

Essays, Posts & Presentations

Keywords

Consciousness, Turing Machines, Cellular Automaton, Chunks, Gists, Weight, Time, Sleeping Experts Algorithm, Up-Tree competition, Intensity, Mood, STM, LTM, Blum

URL

http://www.notebookarchive.org/2021-07-61dzgpq/

DOI

https://notebookarchive.org/2021-07-61dzgpq

Date Added

2021-07-13

Date Last Modified

2021-07-13

File Size

0.59 megabytes

Rights

CC BY-NC-SA 4.0

WOLFRAM SUMMER SCHOOL 2021

Implementing Blum’s: Conscious Turing Machines [CTM’s]

Swarup Tripathy

Electrical and Electronics Engineering, Vellore Institute of Technology, Vellore

Our aim is to build a mathematical architecture for Conscious Turing Machines [CTMs], as proposed by Manuel Blum and Lenore Blum in their paper “A Theoretical Computer Science [TCS] Perspective on Consciousness”, where they describe the CTM as a conscious AI inspired by Bernard Baars's theater analogy. The CTM is not a complex model of the brain but a simple approach to consciousness from the perspective of Theoretical Computer Science.

The architecture follows a deterministic approach in which the chunks generated by the CTM's specialized unconscious processors (for speech, vision, state, touch or whatever else; here they are simply real numbers) compete to reach STM [Short Term Memory], and the winning chunk becomes the entirety of the CTM's conscious content.

Our project deals with implementing the dynamics of Conscious Turing Machines rather than with a conscious state itself.

What is meant is clear!

What and How, about CTMs?

What is Consciousness?

Wikipedia states ~ Consciousness, at its simplest, is sentience or awareness of internal and external existence. Despite millennia of analyses, definitions, explanations and debates by philosophers and scientists, consciousness remains puzzling and controversial, being “at once the most familiar and also the most mysterious aspect of our lives”.

Manuel Blum and Lenore Blum state ~ What we mean by consciousness is conscious awareness: everything you are aware of while you are awake or dreaming, what you see, what you hear and, most importantly, your own private inner speech, because you all speak to yourselves a lot of the time in your heads, and that’s what we are conscious of.

What are Conscious Turing Machines?

CTMs are machines that express their feelings and do not just simulate them. The CTM formalizes neuroscientist Bernard Baars's Theater Model and provides a framework for understanding consciousness. It is a simple mathematical model defined as a 7-tuple <STM, LTM, Up-Tree, Down-Tree, Links, Input, Output>, and it comes in both deterministic and probabilistic versions.

What is the Theater Analogy?

Cognitive neuroscientist Bernard Baars proposed the Global Workspace Theory [GWT] of the brain as his account of consciousness. He described it with a theater analogy: actors in a play perform on the stage of Working Memory, watched by a huge audience of unconscious processors.

How is the CTM different from GWT?

GWT may have several actors on the stage, but the CTM always has exactly one actor, and always the same one, on the stage. That actor can ask or answer any question or communicate any sort of information. Any member of the audience with a response to a question, or with a comment or request of its own, can send the actor its script through a well-defined competition.

How does the CTM work?

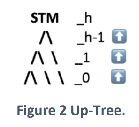

If we compare the CTM to Baars's Theater Model, Short Term Memory [STM] is the stage, and there is always one and the same actor on the stage. At every time step, that actor is handed the winning chunk as a script for broadcast. The Down-Tree works as the broadcast system and the Up-Tree as the competition process, while the Long Term Memory [LTM] is the audience of processors, each competing to get its chunk onto the stage [STM]. The constant presence of chunks competing to get up into STM, together with the continual broadcasting of successive winners down to LTM, produces the stream of consciousness.
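The compete-then-broadcast loop can be made concrete with a minimal, hypothetical toy (not the notebook's implementation). Three made-up processors each turn the last broadcast value into a new submission, the submission with the largest absolute value wins and reaches STM, and that winner is broadcast back as the next input. The names toyProcessors and toyStep, and the "largest |value| wins" rule, are illustrative stand-ins for the Up-Tree competition defined later.

```mathematica
(* Hypothetical toy processors: each maps the last broadcast to a new submission *)
toyProcessors = {# + 1 &, 2 # &, # - 3 &};

(* One step: every processor submits; the largest |value| wins (MaximalBy keeps
   the original order, so ties go to the lowest-index processor) and becomes
   the next broadcast *)
toyStep[broadcast_] := Module[{submissions},
  submissions = #[broadcast] & /@ toyProcessors;
  First[MaximalBy[submissions, Abs]]]

(* The "stream of consciousness": successive STM contents, starting from 1 *)
stream = NestList[toyStep, 1, 5]
```

Here stream evaluates to {1, 2, 4, 8, 16, 32}: after the first step the doubling processor wins every competition, so the broadcast doubles at each step.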

Important Terms

◼ STM or Short Term Memory ~
  ◼ Holds exactly one winning chunk, which then becomes the conscious content
◼ LTM or Long Term Memory ~
  ◼ A large collection of N processors whose workings are all unconscious
  ◼ N = the number of processors, which produce the chunks (N on the order of 10^k)
◼ Chunk ~
  ◼ A 6-tuple <address, time, gist, weight, intensity, mood>
◼ Gist ~
  ◼ Multimodal thoughts
  ◼ Your dreams are manufactured entirely from gists.
  ◼ It can deal with images, sounds, sensations and thoughts
◼ Weight ~
  ◼ How important it is to get that chunk into STM
  ◼ Positive - optimistic/cheerful
  ◼ Negative - pessimistic/depressing
◼ Intensity ~
  ◼ The importance that a processor assigns to a gist
  ◼ Each processor has its own “inner” language for its own personal internal communication
  ◼ The processors could even be systems such as Google, Wolfram Alpha, Siri or AlphaGo
◼ Input Maps
  ◼ What our inner processors receive from the outside world via sensors
◼ Output Maps
  ◼ What is transmitted from the body's inner processing to the outside world or the surrounding environment
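As a minimal sketch, a chunk can be represented as an association holding the six fields listed above. makeChunk is a hypothetical helper, not part of the notebook; it derives Intensity = |Weight| and Mood = Weight, the convention this notebook applies when chunks enter the Up-Tree competition.

```mathematica
(* Hypothetical helper: build a chunk <address, time, gist, weight, intensity, mood> *)
makeChunk[address_Integer, t_Integer, gist_, weight_?NumericQ] :=
 <|"Address" -> address, "Time" -> t, "Gist" -> gist, "Weight" -> weight,
   "Intensity" -> Abs[weight], (* never negative *)
   "Mood" -> weight|>          (* keeps the sign: optimistic vs. pessimistic *)

chunk = makeChunk[3, 0, {1, 0, 1}, -4/5]
```

The resulting chunk has Intensity 4/5 and Mood -4/5: the intensity measures how strongly the chunk competes, while the mood keeps the sign of its weight.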

Setting up the Architecture Dynamics

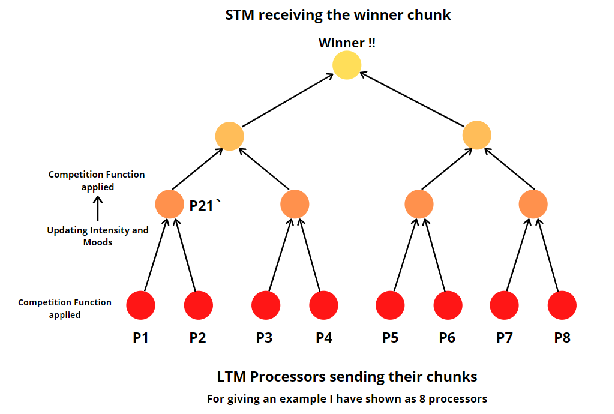

Up-Tree Competition

The processors produce chunks, and the purpose of the Up-Tree competition is to find the chunk to focus on.

This is the architecture we follow for the CTM model; it should give readers a clearer picture before we move on to the code.

Determining the competition function

◼ An example of a competition function is f(chunk) = intensity + mood/2

In[]:=

competitionFunction[chunk_]:=chunk["Intensity"]+chunk["Mood"]/2
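A quick check of what this particular f rewards: at a leaf, a chunk with weight w has intensity |w| and mood w, so f = |w| + w/2. A positive-weight chunk therefore scores 1.5|w| and a negative-weight chunk only 0.5|w|, so this example function favours optimistic chunks. leafChunk is a hypothetical helper; competitionFunction repeats the definition above so the block runs on its own.

```mathematica
competitionFunction[chunk_] := chunk["Intensity"] + chunk["Mood"]/2

(* Hypothetical helper: a leaf chunk with Intensity = |w| and Mood = w *)
leafChunk[w_] := <|"Weight" -> w, "Intensity" -> Abs[w], "Mood" -> w|>

(* Same magnitude, opposite signs: exact rationals avoid rounding noise *)
{competitionFunction[leafChunk[4/5]], competitionFunction[leafChunk[-4/5]]}
(* {6/5, 2/5} *)
```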

Producing Random chunks

◼ chunk <address, t, gist, weight, intensity, mood>, where the intensity is a non-negative real number
◼ Weight is a real number (positive, negative or zero)

The randomChunk function produces random chunks. Since intensities and moods are derived from weights, it only generates a gist (a list of 10 random reals) and a weight.

In[]:=

randomChunk[]:=With[{w=RandomReal[{-1,1}]},<|"Gist"->RandomReal[{-1,1},10],"Weight"->w|>]

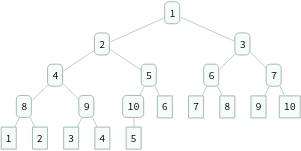

Generating the Up directed Binary Tree

◼ The Up-Tree is an upward-directed binary tree

We build the binary tree with a function of n_ (the number of processors): the processors' chunks feed into its leaves, and the competition works its way up to the root, which holds the final winner.

In[]:=

initialTree=GraphTree[KaryTree[10,DirectedEdges->True]]

In[]:=

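(* n = number of processors; the leaf data are relabelled 1, ..., n so they can serve as processor addresses *)
generateUptree[n_] := With[{tree = GraphTree[KaryTree[2 n, DirectedEdges -> True]]},
  TreeMap[Replace[MapIndexed[TreeData[#1] -> First[#2] &, TreeLeaves[tree]]], tree]]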

In[]:=

generateUptree[10]

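Why 2n vertices for n processors? Assuming KaryTree fills its levels left to right with vertices numbered breadth-first (vertex i has children 2i and 2i+1), vertex i is a leaf exactly when 2i > m in an m-vertex tree. The hypothetical leafCount below applies that rule: with m = 2n there are exactly n leaves, one per processor. Vertex n then has a single child, 2n, so one internal node simply passes its chunk upward unchanged.

```mathematica
(* Hypothetical check: in a breadth-first-numbered binary tree with m vertices,
   vertex i has children 2i and 2i+1, so it is a leaf exactly when 2i > m *)
leafCount[m_Integer?Positive] := Count[Range[m], i_ /; 2 i > m]

(* Leaves of a 2n-vertex tree, as used by generateUptree[n], for n = 1, ..., 6 *)
Table[leafCount[2 n], {n, 1, 6}]
(* {1, 2, 3, 4, 5, 6} *)
```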

Setting the Up-Tree competition Algorithm

◼ At each node, choose the chunk of the child with the greatest value of f; if both children have the same value, choose the one with the smaller address.

◼ Set the intensity and mood of the node's chunk to the sums of the intensities and moods, respectively, of the chunks at the node's children.

In[]:=

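upTreeBranch[chunks_] :=
 Append[
  (* winner: greatest value of f, ties broken by the smallest address *)
  First[MinimalBy[MaximalBy[chunks, competitionFunction], #["Address"] &]],
  (* the node's intensity and mood are the sums over its children *)
  {"Intensity" -> Total[chunks[[All, "Intensity"]]], "Mood" -> Total[chunks[[All, "Mood"]]]}]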
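For readers who prefer plain lists to Tree objects, here is a hypothetical list-based version of the same competition. It pairs neighbouring chunks level by level instead of following the KaryTree shape, so intermediate pairings can differ from the notebook's tree; mergePair and upTreeWinner are illustrative names. Whatever the tree shape, summing at every node means the root always carries the total intensity and total mood of all leaves.

```mathematica
competitionFunction[chunk_] := chunk["Intensity"] + chunk["Mood"]/2

(* Merge sibling chunks: keep the winner (largest f, ties to the smaller
   address) and give it the summed intensity and mood of the siblings *)
mergePair[pair_List] := Join[
  First[MinimalBy[MaximalBy[pair, competitionFunction], #["Address"] &]],
  <|"Intensity" -> Total[pair[[All, "Intensity"]]],
    "Mood" -> Total[pair[[All, "Mood"]]]|>]

(* Hypothetical tournament: pair neighbours level by level until one chunk remains *)
upTreeWinner[leaves_List] := Module[{level = leaves},
  While[Length[level] > 1, level = mergePair /@ Partition[level, UpTo[2]]];
  First[level]]

(* Four leaves with weights 1, -2, 2, 1/2 and addresses 1..4 *)
leaves = MapIndexed[
   <|"Address" -> First[#2], "Weight" -> #1, "Intensity" -> Abs[#1], "Mood" -> #1|> &,
   {1, -2, 2, 1/2}];
winner = upTreeWinner[leaves]
```

Processor 3 wins here; its intensity 11/2 is the sum of all |w| and its mood 3/2 is the sum of all w, the same pattern as in the notebook's outputs, where the STM intensity equals the summed leaf intensities.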

Building up the Conscious Turing Machine Function

◼ Intensity = |Weight|
◼ Mood = Weight

In the single-step definition below, ltm is declared as a local variable (with Module).

rule ~ the association holding the list of processors and the Up-Tree used by the competition

n ~ the number of steps to run for

init ~ the initial chunk, fed to all the processors at the first step

(This is a simplified version that does not use the Sleeping Experts Algorithm to modify weights. A more complete version is given in a later section.)

Here we define the CTM function so that one step of rule_ is applied to init repeatedly, returning the results after 0 through n steps.

In[]:=

ConsciousTuringMachine[rule_,init_,n_]:=NestList[ConsciousTuringMachine[rule,#]&,init,n]

In[]:=

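(* ltm is a local variable shared by the expressions below *)
ConsciousTuringMachine[rule_, init_] := Module[{ltm},
  (* each processor turns the previous winner init into a chunk tagged with its address *)
  ltm = MapIndexed[Append[#[init], "Address" -> #2[[1]]] &, rule["Processors"]];
  (* fold up the tree: leaves get Intensity = |Weight| and Mood = Weight; internal nodes run upTreeBranch *)
  TreeFold[{upTreeBranch[#2] &,
    Append[ltm[[#]], {"Intensity" -> Abs[ltm[[#, "Weight"]]], "Mood" -> ltm[[#, "Weight"]]}] &},
   rule["UpTree"]]]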

Run a simple example with five random processors.

In[]:=

ConsciousTuringMachine[<|"Processors"->Table[randomChunk[]&,5],"UpTree"->generateUptree[5]|>,randomChunk[],5]

Out[]=

{<|"Gist" -> {0.735526,-0.897281,-0.309918,-0.669619,-0.116018,-0.635653,-0.0804484,-0.990653,-0.377546,0.206612}, "Weight" -> 0.450654|>,
 <|"Gist" -> {-0.548359,0.774481,0.116679,0.00815551,-0.524323,0.59349,-0.289006,0.486165,0.898483,0.422255}, "Weight" -> 0.853602, "Address" -> 2, "Intensity" -> 2.90084, "Mood" -> 0.126655|>,
 <|"Gist" -> {-0.0439214,-0.738866,-0.447385,0.401511,-0.204051,-0.417469,-0.564877,-0.903288,-0.882245,-0.977079}, "Weight" -> 0.48178, "Address" -> 2, "Intensity" -> 2.22425, "Mood" -> -1.26069|>,
 <|"Gist" -> {-0.922276,0.575881,0.549482,0.373261,0.439456,-0.393632,0.644457,-0.921257,0.136753,-0.515808}, "Weight" -> 0.942274, "Address" -> 5, "Intensity" -> 3.14199, "Mood" -> 2.59194|>,
 <|"Gist" -> {-0.998119,-0.716091,-0.338204,0.76582,0.448729,0.221522,0.757214,0.883833,-0.230628,0.766618}, "Weight" -> -0.845344, "Address" -> 3, "Intensity" -> 2.09644, "Mood" -> -2.09644|>,
 <|"Gist" -> {0.843537,-0.961403,-0.201403,0.936282,-0.686735,0.433958,-0.754054,0.140885,-0.299451,0.695504}, "Weight" -> 0.964075, "Address" -> 4, "Intensity" -> 2.09827, "Mood" -> 1.34822|>}

Generating Sleeping Experts

What is Sleeping Experts Algorithm?

A crucial learning algorithm used by the LTM processors to correct the errors that generated faulty chunks. It relies on the fact that every processor keeps a record of all the chunks it has ever submitted to the competition.

In the words of Manuel and Lenore Blum, from their paper:

A processor p learns (via broadcasts from STM, links or otherwise) that its submission to the competition (whether or not that submission reached STM) was right or wrong and, provided that the submission has not yet been checked off, p does the following:

1. If what got to STM was right, then p does nothing.
2. If what got to STM was wrong, and
  ◼ if p was right at time t, then
    ◼ p promotes itself, i.e. increases its intensity-giving power (multiplying by 1.5);
  ◼ if p was wrong at time t, then
    ◼ p corrects its error to the extent it can (e.g. her name was Tina, so it did not begin with S);
    ◼ p then demotes itself, i.e. lowers its intensity-giving ability (multiplying by 0.5).
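The rule above can be written as a small function. This is a simplified, hypothetical sketch: sleepingExpertUpdate only rescales a weight, and it leaves out the step in which a wrong processor corrects its own error, since that depends on what the processor computes.

```mathematica
(* Hypothetical sketch of one Sleeping Experts update for processor p:
   stmRight: was the chunk that reached STM right?
   pRight:   was p's own submission at time t right? *)
sleepingExpertUpdate[weight_, stmRight_?BooleanQ, pRight_?BooleanQ] :=
 Which[
  stmRight, weight,      (* 1. STM was right: do nothing *)
  pRight, 1.5 weight,    (* 2a. STM wrong, p right: promote *)
  True, 0.5 weight]      (* 2b. STM wrong, p wrong: demote *)

{sleepingExpertUpdate[1, True, False],
 sleepingExpertUpdate[1, False, True],
 sleepingExpertUpdate[1, False, False]}
(* {1, 1.5, 0.5} *)
```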

Now we define a function that generates the Up-Tree and the Expert if they are not provided.

In[]:=

canonicalisedRule[rule_Association] :=
 If[KeyExistsQ[rule, "UpTree"] && KeyExistsQ[rule, "Expert"],
  rule,
  (* fill in a default Up-Tree and a constant Expert; keys already in rule take precedence *)
  Join[<|"UpTree" -> generateUptree[Length[rule["Processors"]]], "Expert" -> (1 &)|>, rule]]

canonicalisedRule[rule_List] := canonicalisedRule[Association["Processors" -> rule]]

In[]:=

canonicalisedRule[Table[With[{rule=RandomInteger[255]},<|"Weight"->(*Count[CellularAutomaton[rule,#["Gist"]],1]*)1,"Gist"->CellularAutomaton[rule,#["Gist"]]|>&],5]]

Out[]=

<|"UpTree" -> [Tree graphic], "Expert" -> (1 &), "Processors" -> {<|"Weight" -> 1, "Gist" -> CellularAutomaton[215, #1["Gist"]]|> &, <|"Weight" -> 1, "Gist" -> CellularAutomaton[143, #1["Gist"]]|> &, <|"Weight" -> 1, "Gist" -> CellularAutomaton[128, #1["Gist"]]|> &, <|"Weight" -> 1, "Gist" -> CellularAutomaton[231, #1["Gist"]]|> &, <|"Weight" -> 1, "Gist" -> CellularAutomaton[139, #1["Gist"]]|> &}|>

The code from the previous section now involves new variables, stmValue, valuableProcessors and newExpertWeights, which need to be localised.

So, what is happening in the following code?

◼ stmValue ~ the Expert function applied to the chunk that has reached STM.
◼ valuableProcessors ~ the Expert function applied to all the chunks that took part in the competition, keeping the addresses of the processors whose value is greater than stmValue (returns a list).
◼ newExpertWeights ~ the expert weights of the processors in that list are multiplied by 1.5, increasing their intensity-giving power.
◼ Lastly, an If statement: if the valuableProcessors list is non-empty, the chunk that reached STM was wrong, so the expert weight of its processor is divided by 2, lowering its intensity-giving ability.

In[]:=

ConsciousTuringMachine[rule_, init_, n_] :=
 With[{canonicalRule = canonicalisedRule[rule]},
  NestList[ConsciousTuringMachine[canonicalRule, #] &, init, n]]

ConsciousTuringMachine[ruleI_, init_] :=
 Module[{ltm, stm, stmValue, valuableProcessors, newExpertWeights, rule},
  rule = canonicalisedRule[ruleI];
  (* each processor turns the previous winner into a chunk tagged with its address *)
  ltm = MapIndexed[Append[#[init], "Address" -> #2[[1]]] &, rule["Processors"]];
  (* scale each chunk's weight by its processor's current expert weight *)
  ltm[[All, "Weight"]] *= init["ExpertWeights"];
  (* Up-Tree competition; leaves get Intensity = |Weight| and Mood = Weight *)
  stm = TreeFold[{upTreeBranch[#2] &,
     Append[ltm[[#]], {"Intensity" -> Abs[ltm[[#, "Weight"]]], "Mood" -> ltm[[#, "Weight"]]}] &},
    rule["UpTree"]];
  stmValue = rule["Expert"][stm];
  valuableProcessors = Select[ltm, rule["Expert"][#] > stmValue &][[All, "Address"]];
  newExpertWeights = MapAt[#*1.5 &, init["ExpertWeights"], List /@ valuableProcessors];
  (* no valuable processors: the STM chunk was right; otherwise it was wrong, so demote its processor *)
  If[Length[valuableProcessors] > 0, newExpertWeights[[stm["Address"]]] /= 2];
  AppendTo[stm, "ExpertWeights" -> newExpertWeights];
  stm]
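The weight update at the end of ConsciousTuringMachine can be isolated on plain lists. updateExpertWeights is a hypothetical helper, not part of the notebook: it takes the current expert weights, each processor's Expert score and the address of the STM winner, and applies the promote/demote rule described above. It builds the new weights with Table rather than MapAt, which keeps the case of no promoted processors explicit.

```mathematica
(* Hypothetical helper mirroring the update step of ConsciousTuringMachine *)
updateExpertWeights[weights_List, scores_List, stmAddress_Integer] :=
 Module[{stmValue, valuable, newWeights},
  stmValue = scores[[stmAddress]];
  (* processors whose Expert score beats the chunk that reached STM *)
  valuable = Select[Range[Length[scores]], scores[[#]] > stmValue &];
  (* promote them by a factor of 1.5 *)
  newWeights = Table[If[MemberQ[valuable, i], 1.5 weights[[i]], weights[[i]]],
    {i, Length[weights]}];
  (* if anyone beat the winner, the STM chunk was wrong: halve its weight *)
  If[valuable =!= {}, newWeights[[stmAddress]] /= 2];
  newWeights]

updateExpertWeights[{1, 1, 1}, {2, 5, 7}, 2]  (* processor 3 beat the winner *)
updateExpertWeights[{1, 1, 1}, {2, 5, 3}, 2]  (* nobody beat the winner *)
```

The first call returns {1, 1/2, 1.5} and the second leaves {1, 1, 1} unchanged. Because the weights start as the integer 1, halving gives the exact fraction 1/2, which is why exact fractions such as 1/2 can appear among the ExpertWeights in the notebook's outputs.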

Examples

Cellular Automaton

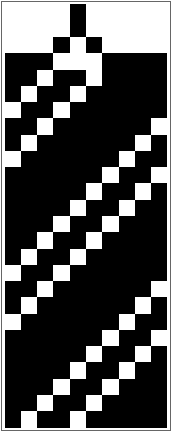

Finally, we use the CellularAutomaton function to produce the gists: each processor applies one step of an elementary cellular automaton, with a randomly chosen rule, to the previous gist, and every chunk is assigned weight 1.

The Expert counts the number of 1s in the gist.

We run this for 25 iterations.
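To see what a single processor does to a gist, here is one step of an elementary cellular automaton applied to the initial gist CenterArray[10], followed by the Expert score (the number of 1s). Rule 90 is chosen only for illustration; in the run that follows, each processor gets a random rule from 0 to 255.

```mathematica
gist0 = CenterArray[10];          (* {0,0,0,0,1,0,0,0,0,0} *)

(* One step of rule 90 (each cell becomes the XOR of its two neighbours),
   with the cyclic boundary CellularAutomaton uses for a plain list *)
gist1 = CellularAutomaton[90, gist0]
(* {0,0,0,1,0,1,0,0,0,0} *)

(* The Expert used in this example: count the 1s in the gist *)
Count[gist1, 1]
(* 2 *)
```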

In[]:=

ConsciousTuringMachine[<|"Processors"->Table[With[{rule=RandomInteger[255]},<|"Weight"->(*Count[CellularAutomaton[rule,#["Gist"]],1]*)1,"Gist"->CellularAutomaton[rule,#["Gist"]]|>&],5],"UpTree"->generateUptree[5],"Expert"->(Count[#["Gist"],1]&)|>,<|"Gist"->CenterArray[10],"Weight"->1,"ExpertWeights"->Table[1,5]|>,25]

Out[]=

{<|"Gist" -> {0,0,0,0,1,0,0,0,0,0}, "Weight" -> 1, "ExpertWeights" -> {1,1,1,1,1}|>,
 <|"Weight" -> 1, "Gist" -> {0,0,0,0,1,0,0,0,0,0}, "Address" -> 1, "Intensity" -> 5, "Mood" -> 5, "ExpertWeights" -> {1/2,1.5,1,1.5,1.5}|>,
 <|"Weight" -> 1.5, "Gist" -> {1,1,1,1,0,1,1,1,1,1}, "Address" -> 2, "Intensity" -> 6., "Mood" -> 6., "ExpertWeights" -> {1/2,1.5,1,1.5,1.5}|>,
 <|"Weight" -> 1.5, "Gist" -> {0,0,0,0,1,0,0,0,0,0}, "Address" -> 2, "Intensity" -> 6., "Mood" -> 6., "ExpertWeights" -> {0.75,0.75,1,1.5,1.5}|>,
 <|"Weight" -> 1.5, "Gist" -> {0,0,0,0,1,1,0,0,0,0}, "Address" -> 4, "Intensity" -> 5.5, "Mood" -> 5.5, "ExpertWeights" -> {0.75,1.125,1,0.75,2.25}|>,
 <|"Weight" -> 2.25, "Gist" -> {1,1,1,1,1,0,1,1,1,1}, "Address" -> 5, "Intensity" -> 5.875, "Mood" -> 5.875, "ExpertWeights" -> {0.75,1.125,1,0.75,2.25}|>,
 <|"Weight" -> 2.25, "Gist" -> {0,0,0,0,0,0,1,0,0,0}, "Address" -> 5, "Intensity" -> 5.875, "Mood" -> 5.875, "ExpertWeights" -> {1.125,1.125,1,0.75,1.125}|>,
 <|"Weight" -> 1.125, "Gist" -> {0,0,0,0,0,0,1,0,0,0}, "Address" -> 1, "Intensity" -> 5.125, "Mood" -> 5.125, "ExpertWeights" -> {0.5625,1.6875,1,1.125,1.6875}|>,
 <|"Weight" -> 1.6875, "Gist" -> {1,1,1,1,1,1,0,1,1,1}, "Address" -> 2, "Intensity" -> 6.0625, "Mood" -> 6.0625, "ExpertWeights" -> {0.5625,1.6875,1,1.125,1.6875}|>,
 <|"Weight" -> 1.6875, "Gist" -> {0,0,0,0,0,0,1,0,0,0}, "Address" -> 2, "Intensity" -> 6.0625, "Mood" -> 6.0625, "ExpertWeights" -> {0.84375,0.84375,1,1.125,1.6875}|>,
 <|"Weight" -> 1.6875, "Gist" -> {1,1,1,1,1,1,0,1,1,1}, "Address" -> 5, "Intensity" -> 5.5, "Mood" -> 5.5, "ExpertWeights" -> {0.84375,0.84375,1,1.125,1.6875}|>,
 <|"Weight" -> 1.6875, "Gist" -> {0,0,0,0,0,0,0,1,0,0}, "Address" -> 5, "Intensity" -> 5.5, "Mood" -> 5.5, "ExpertWeights" -> {1.26563,0.84375,1,1.125,0.84375}|>,
 <|"Weight" -> 1.26563, "Gist" -> {0,0,0,0,0,0,0,1,0,0}, "Address" -> 1, "Intensity" -> 5.07813, "Mood" -> 5.07813, "ExpertWeights" -> {0.632813,1.26563,1,1.6875,1.26563}|>,
 <|"Weight" -> 1.6875, "Gist" -> {0,0,0,0,0,0,0,1,1,0}, "Address" -> 4, "Intensity" -> 5.85156, "Mood" -> 5.85156, "ExpertWeights" -> {0.632813,1.89844,1,0.84375,1.89844}|>,
 <|"Weight" -> 1.89844, "Gist" -> {1,1,1,1,1,1,1,0,0,1}, "Address" -> 2, "Intensity" -> 6.27344, "Mood" -> 6.27344, "ExpertWeights" -> {0.632813,0.949219,1,0.84375,2.84766}|>,
 <|"Weight" -> 2.84766, "Gist" -> {0,0,0,0,0,0,0,1,1,1}, "Address" -> 5, "Intensity" -> 6.27344, "Mood" -> 6.27344, "ExpertWeights" -> {0.949219,0.949219,1,0.84375,1.42383}|>,
 <|"Weight" -> 0.949219, "Gist" -> {0,0,0,0,0,0,0,0,1,0}, "Address" -> 1, "Intensity" -> 5.16602, "Mood" -> 5.16602, "ExpertWeights" -> {0.474609,1.42383,1,0.84375,2.13574}|>,
 <|"Weight" -> 2.13574, "Gist" -> {1,1,1,1,1,1,1,1,0,1}, "Address" -> 5, "Intensity" -> 5.87793, "Mood" -> 5.87793, "ExpertWeights" -> {0.474609,1.42383,1,0.84375,2.13574}|>,
 <|"Weight" -> 2.13574, "Gist" -> {0,0,0,0,0,0,0,0,0,1}, "Address" -> 5, "Intensity" -> 5.87793, "Mood" -> 5.87793, "ExpertWeights" -> {0.711914,1.42383,1,0.84375,1.06787}|>,
 <|"Weight" -> 1.42383, "Gist" -> {1,1,1,1,1,1,1,1,1,0}, "Address" -> 2, "Intensity" -> 5.04736, "Mood" -> 5.04736, "ExpertWeights" -> {0.711914,1.42383,1,0.84375,1.06787}|>,
 <|"Weight" -> 1.42383, "Gist" -> {0,0,0,0,0,0,0,0,0,1}, "Address" -> 2, "Intensity" -> 5.04736, "Mood" -> 5.04736, "ExpertWeights" -> {1.06787,0.711914,1,0.84375,1.06787}|>,
 <|"Weight" -> 1.06787, "Gist" -> {0,0,0,0,0,0,0,0,0,1}, "Address" -> 1, "Intensity" -> 4.69141, "Mood" -> 4.69141, "ExpertWeights" -> {0.533936,1.06787,1,1.26563,1.60181}|>,
 <|"Weight" -> 1.60181, "Gist" -> {1,1,1,1,1,1,1,1,1,0}, "Address" -> 5, "Intensity" -> 5.46924, "Mood" -> 5.46924, "ExpertWeights" -> {0.533936,1.06787,1,1.26563,1.60181}|>,
 <|"Weight" -> 1.60181, "Gist" -> {1,0,0,0,0,0,0,0,0,0}, "Address" -> 5, "Intensity" -> 5.46924, "Mood" -> 5.46924, "ExpertWeights" -> {0.800903,1.06787,1,1.26563,0.800903}|>,
 <|"Weight" -> 1.06787, "Gist" -> {0,1,1,1,1,1,1,1,1,1}, "Address" -> 2, "Intensity" -> 4.9353, "Mood" -> 4.9353, "ExpertWeights" -> {0.800903,1.06787,1,1.26563,0.800903}|>,
 <|"Weight" -> 1.06787, "Gist" -> {1,0,0,0,0,0,0,0,0,0}, "Address" -> 2, "Intensity" -> 4.9353, "Mood" -> 4.9353, "ExpertWeights" -> {1.20135,0.533936,1,1.26563,0.800903}|>}

The following shows how the Sleeping Experts work on processors whose gists come from the CellularAutomaton function. Because the rules are chosen at random, evaluate it a few times to see different results.

In[]:=

cellImg=ConsciousTuringMachine[<|"Processors"->Table[With[{rule=RandomInteger[255]},<|"Weight"->(*Count[CellularAutomaton[rule,#["Gist"]],1]*)1,"Gist"->CellularAutomaton[rule,#["Gist"]]|>&],5],"Expert"->(Count[#["Gist"],1]&)|>,<|"Gist"->CenterArray[10],"Weight"->1,"ExpertWeights"->Table[1,5]|>,25];

In[]:=

ArrayPlot[cellImg[[All,"Gist"]]]

Testing the Sleeping Experts Algorithm for adjusting weights.

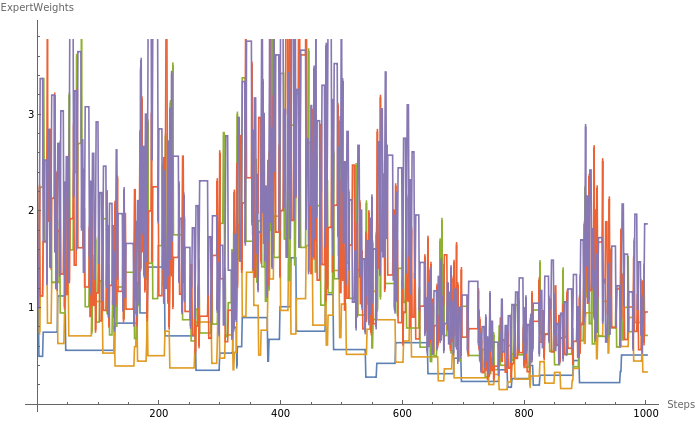

Run a CTM with 5 processors where, at each step, each processor produces a chunk whose gist is a random number drawn from a normal distribution with standard deviation 1 and mean equal to the index of that processor. The Expert function just rewards gists with larger absolute values, so we expect the experts to give more weight to the higher-numbered processors.

In[]:=

run=ConsciousTuringMachine[<|"Processors"->Table[With[{mean=m},<|"Gist"->RandomVariate[NormalDistribution[mean,1]],"Weight"->1|>&],{m,1,5}],"Expert"->(Abs[#["Gist"]]&)|>,<|"ExpertWeights"->Table[1,5]|>,1000]

Out[]=

{<|"ExpertWeights" -> {1,1,1,1,1}|>, <|"Gist" -> 2.49424, "Weight" -> 1, "Address" -> 1, "Intensity" -> 5, "Mood" -> 5, "ExpertWeights" -> …|>, ⋯996⋯, …}

This shows the weights that the experts applied to each of the processors over time. Note that the overall weight decreases.

In[]:=

ListLinePlot[Transpose@run[[All, "ExpertWeights"]], PlotLegends -> Automatic, AxesLabel -> {"Steps", "ExpertWeights"}]

Here processor 1 returns gists with mean 1, processor 2 with mean 2, and so on, all with standard deviation 1. This time the Expert scores the gist itself rather than its absolute value, and the run lasts 10000 steps.

In[]:=

run=ConsciousTuringMachine[<|"Processors"->Table[With[{mean=m},<|"Gist"->RandomVariate[NormalDistribution[mean,1]],"Weight"->1|>&],{m,1,5}],"Expert"->(#["Gist"]&)|>,<|"ExpertWeights"->Table[1,5]|>,10000];

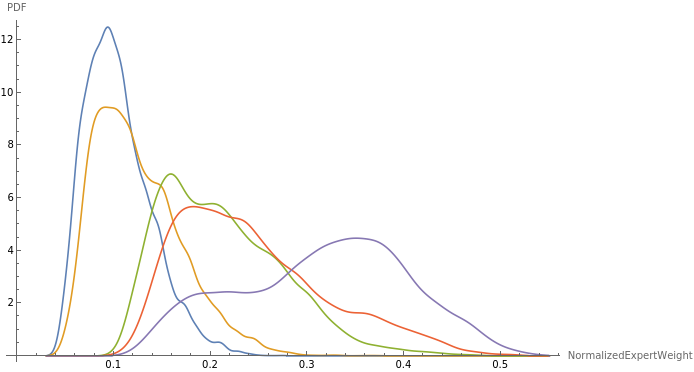

This smooth histogram shows, for each of the 5 processors, the distribution (PDF) of its normalized expert weight over the run. The Sleeping Experts Algorithm did not always assign the correct weights to the processors, and we have not yet been able to properly explain this unusual behaviour of the expert weights.

In[]:=

SmoothHistogram[Transpose[Normalize[#, Total] & /@ run[[All, "ExpertWeights"]]], PlotLegends -> Automatic, AxesLabel -> {"NormalizedExpertWeight", "PDF"}]

Now we set the standard deviation to 0.3 and compare the result with the previous one: a smaller standard deviation gives a much clearer distinction in the behaviour of the expert weights. In this example, Sleeping Experts weighted processor 5 highly but did not assign the weights properly to the other processors.
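A back-of-the-envelope calculation, under simplifying assumptions, suggests why the smaller standard deviation separates the processors more clearly. If processors 4 and 5 draw independent gists with means 4 and 5 and a common standard deviation σ, their difference is normal with mean 1 and standard deviation √2 σ, so the chance that processor 5's gist is larger is one minus that normal CDF evaluated at 0. pHigherWins is a hypothetical helper; it describes a single pairwise comparison, not the full Sleeping Experts dynamics.

```mathematica
(* Hypothetical: P(X5 > X4) for independent X5 ~ N(5, sd), X4 ~ N(4, sd);
   the difference X5 - X4 ~ N(1, Sqrt[2] sd) *)
pHigherWins[sd_] := 1 - CDF[NormalDistribution[1, Sqrt[2] sd], 0]

N[pHigherWins[1]]    (* about 0.76 *)
N[pHigherWins[0.3]]  (* about 0.99 *)
```

With σ = 1 the better processor produces the larger gist only about three times in four, so the Expert's verdicts are noisy; with σ = 0.3 it does so about 99% of the time.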

In[]:=

run=ConsciousTuringMachine[<|"Processors"->Table[With[{mean=m},<|"Gist"->RandomVariate[NormalDistribution[mean,0.3]],"Weight"->1|>&],{m,1,5}],"Expert"->(#["Gist"]&)|>,<|"ExpertWeights"->Table[1,5]|>,10000];(* the gist that each processor returns is a random number *)

In[]:=

SmoothHistogram[Transpose[Normalize[#, Total] & /@ run[[All, "ExpertWeights"]]], PlotLegends -> Automatic]

Concluding remarks

We wanted to build an architecture that aims at simplicity: a simple model of consciousness rather than a complex model of the brain. As the Blums state in their paper, what gives rise to consciousness is the expressiveness of the brain's inner language together with the architecture of the system, its basic processes and its dynamics.

In our modeled architecture we work with real numbers to display the variations at each stage of the competition among the chunks fed in by the processors. This project does the following:

1. It presents a simple mathematical model of consciousness, expressed formally as Conscious Turing Machines.
2. It defines the competition function and the Up-Tree competition algorithm, through which every chunk in a CTM must pass.
3. It takes the conscious content of the CTM to be whatever chunk is in STM, and defines conscious awareness by the CTM as the reception by all LTM processors of STM's broadcast of that content.
4. In the examples, gists are produced by cellular automata with random rules acting on lists of 0s and 1s.
5. It uses the Sleeping Experts Algorithm to compare and verify the processor whose chunk reaches STM for the broadcast.
6. Finally, the Sleeping Experts Algorithm did not always assign the correct weights to the processors, which is worth noting.

Future Plans and Ideas

My future plans involve using the probabilistic approach for Conscious Turing Machines. In the current project we use cellular automata to generate random behaviour, but we could also look into incorporating Turing machines.

Further, if you watch the talk by Lenore Blum mentioned in the references, at the end she talks about “Change Blindness” using a scene from a film. Since humans tend to pay attention to the plot of the story and not to what is happening in the background (the change in the vase, the tapestry behind, the dead man's position, etc.), I asked her: what if we proposed a CTM that is aware of each and every object in the scene? Would that slow the CTM down or improve its behaviour?

Time to get to work and find out!

Keywords

◼ CTMs or Conscious Turing Machines
◼ STM or the Short Term Memory
◼ LTM or the Long Term Memory
◼ Processors
◼ Chunk
◼ Gist
◼ Weight
◼ Intensity
◼ Up-Tree Competition Algorithm
◼ Sleeping Experts Algorithm

Acknowledgment

Mentor: Christopher Wolfram

From knowing nothing to knowing something, Christopher Sir played a very important role in the successful implementation of the project. Even though I disturbed him every day with meetings, he guided me and taught me a lot about the project, and the moments we laughed together will remain with me forever. I also want to mention my wonderful TAs, whose assistance was there for me at every moment of the summer school.

I want to express my gratitude to Manuel Blum Sir and Lenore Blum Ma’am, whose work and guidance helped me complete my project.

I also want to thank Mads Bahrami Sir for conducting my interview, and Erin Cherry Ma’am for her kind words throughout the summer school.

A wholehearted thank you to Swastik Banerjee, who guided me to join the Wolfram Summer School in the first place.

And the person who made all this possible, Stephen Wolfram Sir, a person of immense knowledge who shared his knowledge and experience with us. I hope that, with all the experience I gained from this amazing summer school, I will make a difference one day.

Last but not least, the fellow students of the Summer School 2021, coming from different fields of science, from whom I learned a lot during the sessions.

Thank you, everyone!

An experience worth remembering.

References

Cite this as: Swarup Tripathy, "Implementing Blum's: Conscious Turing Machines" from the Notebook Archive (2021), https://notebookarchive.org/2021-07-61dzgpq
